The buzz surrounding AI in broadcasting is getting louder as the year progresses. Why now? How mature is AI development throughout the industry? And what sort of projects are we going to see as it develops?

AI first started to have a notable impact on the broadcast sector in the wake of IBC2017. Back then we sketched out some definitions for the concepts involved, and it’s worth doing so again, especially as not all applications and services being touted as AI showcase genuine artificial intelligence.

Here are some definitions:

Artificial Intelligence: Any software or device that reacts to its environment and alters its actions to maximize its chances of success at a given task. It thus appears intelligent. It is also often used as a catch-all term that encompasses the two terms below.

Machine Learning: Algorithms that can learn from data inputs and make accurate predictions/decisions as a result.

Deep Learning: The construction of evolving artificial neural networks (frameworks, based on structures found in organic brains, in which machine-learning algorithms can work collaboratively) to analyze large amounts of data and produce conclusions.

One of the main reasons you’re hearing a lot of buzz about AI this year is that the systems, particularly when it comes to AI and ML, are now in place and are transitioning off the drawing board and into real-world use cases. IBC recently ran a feature looking at AI in the broadcast industry and came across real-world projects as diverse as using AI to automatically generate metadata; generate intermediate frames, and thus super slo-mo, from regular camera feeds; analyze audience behaviour patterns; provide speech to text; refine complex workflows; and much more.

Much of it is to do with automation in the value chain, and currently it is mostly this that is driving uptake in the broadcast industry. Broadcast is a very process-oriented business and many of its processes map well onto automation: think of Quality Control, monitoring channels for rights purposes, conforming, closed caption generation, ingest… the list is a long one. The use of technology to increase speed and efficiency while at the same time cutting costs is an attractive proposition.

So, where is AI in Broadcast in 2019?

First off, it has to be said that, at the time of writing, there is a lot of hyperbole regarding AI technologies and their impact on the value chain. A lot of what is being talked about is either projection or prototype, or concerns projects that so far have a rather limited scope. The hype machine is in full flow as companies hoping to leverage AI technologies seek to raise funding and maximize interest.

This is not helped by a media that is fully buying into the more fanciful elements of the AI story. Sometimes its stories showcase legitimate concerns as well as rattling the funding tip jar, such as the one headlined ‘New AI fake text generator may be too dangerous to release, say creators’, which highlighted the problems of text-based deep fakes. On the whole, though, there is a depressing willingness to buy into the trope of the inanimate becoming animate and leading to disaster, a cultural artifact you can trace from Talmudic stories of golems, through Frankenstein, and on to the Terminator movies and SkyNet. It is a point the same newspaper adroitly made a few months earlier in a piece titled ‘“The discourse is unhinged”: how the media gets AI alarmingly wrong’.

Indeed, in its introduction to a gated report examining the hype cycle in relation to AI, analyst Gartner wrote: “AI is almost a definition of hype. Yet, it is still early: New ideas will surface and some current ideas will not live up to expectations.” Away from the alarmist mainstream headlines, the AI-based technologies it sees as currently sliding into the Trough of Disillusionment (one of the phases of its Hype Cycle) include such high-profile cases as Computer Vision, Autonomous Vehicles, Commercial Drones, and Augmented Reality.

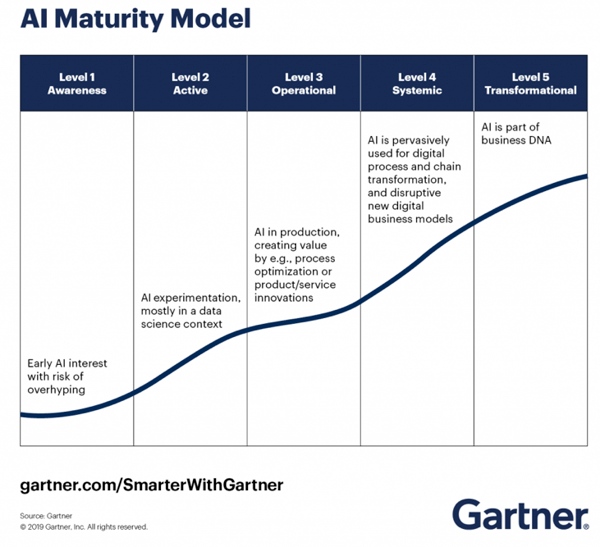

So, where are we in broadcast? Gartner has also produced a useful AI Maturity Model (see below) where companies and industries can measure their progress along a line that illustrates the growing deployment and impact of the technology.

As a whole, at this stage early in 2019 the broadcast industry is probably strung out in a line somewhere between the start of Level 2 and the early stages of Level 3. Level 2 is Active, and defined as AI appearing in proofs of concept and pilot projects, with meetings about AI focusing on knowledge sharing and the beginnings of standardization.

The Level 3 Operational stage sees at least one AI project having moved to production and best practices, while experts and technology are increasingly accessible to the enterprise. AI also has an executive sponsor within the organisation and a dedicated budget.

Things are moving swiftly, though. IBC2017 was, after all, only eighteen months ago. One of the accelerants for the introduction of the technology is that the broadcast industry has been moving into the cloud at the same time. Companies no longer need to invest in their own infrastructure, hardware and software to implement AI in the value chain; they can outsource it via the cloud.

This is becoming easier to do than ever as well. As an illustration of what is available, AWS has a Free Tier that it bills as offering ‘Free offers and services you need to build, deploy, and run machine learning applications in the cloud’. The cloud-based machine learning services organizations and individuals can hook up to for no cost include:

- Text to speech: 5 million characters per month

- Speech to text: 60 minutes per month

- Image recognition: Analyze 5000 images and store 1000 face metadata per month

- Natural language processing: 5 million characters per month

Even the fully costed versions can make a compelling argument. The Amazon Transcribe API is billed monthly at a rate of $0.0004 per second, for instance, meaning a transcript of a 60-minute show (3,600 seconds) would cost $1.44. And, of course, though it is enormous and has a global reach, AWS is only one of a growing number of cloud-based companies offering AIaaS.
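The Transcribe arithmetic above is easy to check. The following is a minimal sketch that assumes the flat per-second rate quoted in this article and ignores any free-tier allowance or minimum charge; the function name and its defaults are illustrative, not part of any AWS SDK.

```python
from decimal import Decimal

# Rate quoted in this article; treat it as an assumption, not current pricing.
RATE_PER_SECOND_USD = Decimal("0.0004")


def transcription_cost(minutes: int, rate_per_second: Decimal = RATE_PER_SECOND_USD) -> Decimal:
    """Return the cost in USD of transcribing `minutes` of audio at a flat per-second rate."""
    seconds = Decimal(minutes) * 60
    # Round to whole cents for display purposes.
    return (seconds * rate_per_second).quantize(Decimal("0.01"))


print(transcription_cost(60))  # 1.44
```

Decimal arithmetic is used rather than floats so that the result comes out as exactly 1.44 instead of a value with binary rounding noise.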

AI is Augmentation over Automation

One key point to make about AI in 2019 is that the industry is still largely working out the use cases. Automation is only the start of it, and indeed to look only at the places in a broadcast workflow where AI can automate processes is to underestimate the potential of the technology. AI holds out the promise of augmenting human actions, of being able to analyze information and make predictions based on those results faster than humans can.

That means when examining loci in a workflow where the technology can help, there are a few key considerations:

- Does expert knowledge add value?

- Is there a large amount of data to be processed?

- Is the organization looking to affect an outcome included in that data?

If the answer to all three questions is yes, then that is a point in the business that can be further augmented by AI.
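The three-question test above can be sketched as a simple screening function. This is only an illustration: the `WorkflowPoint` type, its field names, and the example workflow points are hypothetical, and in practice each answer would be a judgment call rather than a clean yes or no.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowPoint:
    """A hypothetical description of one point in a broadcast workflow."""
    name: str
    expert_knowledge_adds_value: bool
    large_data_volume: bool
    outcome_captured_in_data: bool


def is_augmentation_candidate(point: WorkflowPoint) -> bool:
    """True only if all three questions from the checklist are answered yes."""
    return (
        point.expert_knowledge_adds_value
        and point.large_data_volume
        and point.outcome_captured_in_data
    )


# Illustrative answers only; real assessments will differ by organization.
points = [
    WorkflowPoint("ad placement", True, True, True),
    WorkflowPoint("studio booking", True, False, False),
]
candidates = [p.name for p in points if is_augmentation_candidate(p)]
```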

AI in Broadcasting: All About Context

As we’ve said, there are lots of use cases and projects involving AI currently underway across the industry, but we’ll end by highlighting one example of what can be achieved in the field: the UK’s Channel 4 and its trials of Contextual Moments. This is an AI-driven technology that uses image recognition and natural language processing to analyze scenes in pre-recorded content, producing a list of positive moments that can then be matched to a brand.

Low-scoring moments are discarded, whilst all candidate moments are checked by humans to ensure brand safety. After that, to use C4’s example, where a baking brand might previously have contextually advertised around a show such as ‘The Great British Bake-Off’, it can now be presented with a list of programs where baking happens in a positive light, from dramas to reality shows.
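The flow described above (score, discard, human check, match to a brand) might be sketched as follows. Everything here is an assumption made for illustration: the `Moment` fields, the 0 to 1 score scale, the threshold and the sample data are hypothetical and do not describe Channel 4's actual system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Moment:
    """A hypothetical scored scene from pre-recorded content."""
    programme: str
    tag: str                      # e.g. "baking", as image recognition / NLP might label it
    score: float                  # assumed positivity score between 0 and 1
    human_approved: bool = False  # set by a human brand-safety reviewer


def shortlist(moments: List[Moment], brand_tag: str, min_score: float = 0.7) -> List[Moment]:
    """Keep moments that match the brand's tag and discard low-scoring ones."""
    return [m for m in moments if m.tag == brand_tag and m.score >= min_score]


def brand_safe(candidates: List[Moment]) -> List[Moment]:
    """Keep only candidates a human reviewer has signed off."""
    return [m for m in candidates if m.human_approved]


# Invented sample data for illustration only.
moments = [
    Moment("Drama A", "baking", 0.91, human_approved=True),
    Moment("Reality B", "baking", 0.42, human_approved=True),
    Moment("Drama C", "baking", 0.85, human_approved=False),
    Moment("Quiz D", "cars", 0.95, human_approved=True),
]
offer = [m.programme for m in brand_safe(shortlist(moments, "baking"))]
```

Keeping the human check as a separate step mirrors the article's description, in which every candidate moment is reviewed regardless of how well it scored.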

Channel 4’s initial testing with 2000 people showed that this AI-driven contextual version of targeted advertising boosted brand awareness and doubled ad recall to 64%. It will be interesting to see how those results are mirrored in real-world data.

As yet, the Level 3 ideal of AI having a dedicated budget within an organization is largely fanciful; we are at too early a stage for ROI data to be reliably collated. The tendency for it to be applied mainly for automation purposes limits its impact, even though cost reductions can be impressive. More complex projects and thus more strategic impacts are on the way, though.

Gartner’s Level 4 of AI implementation sees all new digital projects at least considering AI, new products and services shipping with embedded AI, and AI-powered applications interacting productively (and, presumably, with a degree of autonomy) within the broadcast organization. Given the pace of development so far, you wouldn’t want to bet against some of the companies at the forefront of AI development starting to push into that territory towards the end of the year.