In the past couple of years AI has had a profound impact on the broadcast industry. We talked to two of our Artificial Intelligence experts about where AI in broadcast is and where it may be going.

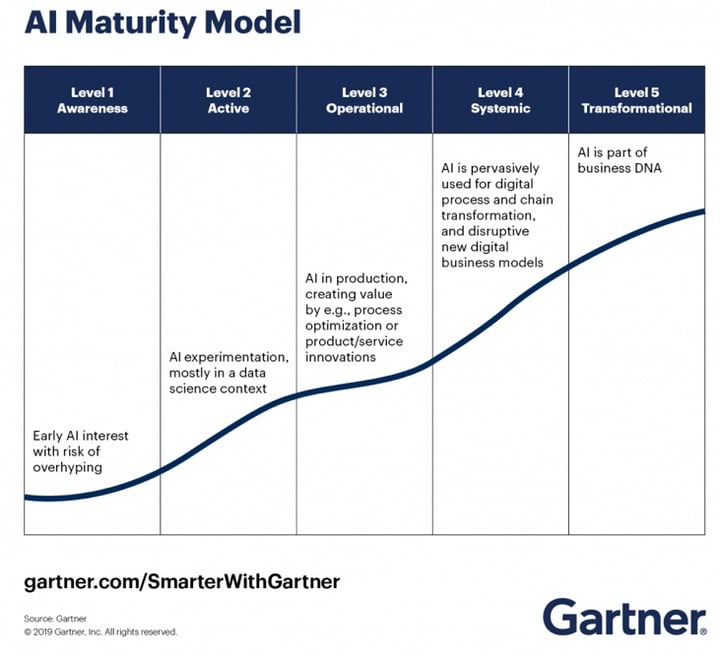

Three and a half years ago we published a blog post titled "Why you are hearing a lot about AI in broadcasting this year", which looked at the buzz at the time around AI, Machine Learning, and Deep Learning. We detailed how the industry was strung out in a line somewhere between the start of Level 2, which is characterised by experimentation, and the early stages of Level 3, characterised by production, in Gartner’s AI Maturity Model.

It’s worth reproducing that graphic below as we have moved forward rapidly since.

AI will be everywhere at IBC2022 and so pervasive has it become that some companies will no longer even mention it. Much like using computers or the internet it has become ubiquitous enough to not really be news anymore. Of course, AI is used to perform that task. How else would you do it?

At VO we have been one of the pioneers of deploying AI in the video management technology sphere and it is now at the core of much of our business and the way we will develop our solutions in the future. We spoke to two of our experts, CTO Alain Nochimowski, and Head of AI, Alice Wittenberg, about AI’s role in the company and the industry, now and into the future.

Q: AI has become very much part of our business over the past few years. Where are we with the technology?

Alain Nochimowski: Where we are now is in production mode, the industrial mode of AI. AI now infuses most of our TV/video product lines. It is in security, allowing us to automatically spot pirates and websites illegally redistributing content; it is in segmentation, where we cluster our audience together to produce the segments that are needed for targeted advertising; and, among other projects, we are notably working on preventive maintenance, trying to predict in advance events such as the failure of the systems we monitor, with what is known as AIOps applied to the TV domain. Most of the focus now is no longer on trying to obtain the data or engineer the data; it's more about streamlining all this. It’s one thing to have a model in the lab of an ideal system, but when Alice designs a model she knows that it is going to change when it hits the real world. The model has to integrate with the operational aspects right away, and that's the way it has to be designed as we move into the industrial application of AI.

Alice Wittenberg: Models were initially developed in the lab based on our historical data. Since then, they have evolved and changed as new data has accumulated over time. Our original model is different from the one we have now, two years later.

AN: There is also linked progress with the way we handle data. The old-fashioned monolithic view of data as the ‘data lake’ that's draining somewhere? That also is changing. We are decentralising the data collection, decentralising the data governance to the different product lines, and setting up a genuine data mesh. AI is now firmly integrated into our products wherever appropriate.

Q: Are the models still being developed in isolation or are we at the point where there is a single VO AI that we repurpose for different purposes?

AW: Models can be very cross-cutting, such as constructing a segment of general behavior, or very specific, such as monitoring failure. In the specific cases, you have a model for each system that is trained on that system, has its own thresholds and provides its own results. Putting it on another system will not work.

AN: Our systems produce data and we tailor the AIs to address specific business issues, working with that data and that system, optimising the processes involved over time. That's what the team does. A lot of the added value of our data science team stems from this inner, domain-specific knowledge of both the data produced by our TV systems and the business issues we are trying to solve. That being said, I also think we need to be cognizant that even when we completely master the data and completely master the AI, it doesn't solve absolutely everything. There is a long learning curve to master this kind of discipline.

AW: You can’t spend two minutes designing a model in the lab and say that that is it. You are improving it all the time. Extracting the first model takes a lot of data, and then we monitor the results constantly. Models are no longer static; they change completely and quickly. Data is dynamic, and even over the course of three weeks the system evolves from what it was.

The model is evolving and living inside the system. Today you can’t develop a model, deploy it, and forget about it.
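As a rough illustration of what constant monitoring of a deployed model can involve, here is a minimal Python sketch. It is not VO's implementation: the function names, the sample values and the threshold are all hypothetical, and a real system would likely use richer drift tests. It simply compares a recent window of a monitored metric with the reference window the model was trained on, and flags the model for retraining when the mean has moved too far.

```python
from statistics import mean, stdev

def drift_score(reference, recent):
    """How far the recent mean has moved, in reference standard deviations."""
    ref_sd = stdev(reference)
    if ref_sd == 0:
        # A perfectly flat reference: any change at all counts as drift.
        return 0.0 if mean(recent) == mean(reference) else float("inf")
    return abs(mean(recent) - mean(reference)) / ref_sd

def needs_retraining(reference, recent, threshold=3.0):
    """Flag the model when drift exceeds a (hypothetical) threshold."""
    return drift_score(reference, recent) > threshold

# Hypothetical metric values, e.g. a daily error rate for one monitored system.
reference_window = [0.10, 0.12, 0.11, 0.09, 0.10, 0.11]
stable_window = [0.10, 0.11, 0.12, 0.10]
shifted_window = [0.30, 0.32, 0.29, 0.31]

print(needs_retraining(reference_window, stable_window))
print(needs_retraining(reference_window, shifted_window))
```

Because each system has its own data and thresholds, a check like this would be configured per system rather than shared across them.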

Q: The industry has come a long way in a fairly short time, and VO in particular. What has been the driving force here?

AN: I think we are pretty advanced in the mastery of the stacks, in the mastery of how we deploy models. First and foremost this has to do with our people. Over time, VO has managed to develop and nurture very skilled and talented data engineering and data science teams. It also has to do with our decision to move to the cloud. The reason we were able to accelerate AI development two or three years ago is that we decided the cloud would be a core environment in which to develop our data capabilities. The cloud allows us to use as much capacity or processing power as we need on demand, and sometimes models use a lot of capacity. We were restricted in a pure on-prem operation: we couldn't think big, we couldn’t scale. The cloud allows us to scale. It also allows us to store the data and to always be up to date in terms of the data stack. It's evolved so, so fast. And that raises new questions; each cloud has its own specificities. So which one should you choose? Or should you go with meta-approaches that span multiple clouds and provide some abstraction layer? These are new questions that the industry has to address.

Q: The roadmap always used to be about AI leading to Machine Learning and then on to Deep Learning. Is that still the case?

AW: There is a lot of data, and we are working at a large scale. The cloud enables us to use a cluster of machines to maintain the data and do all the processing. In the past, we were limited to using machine learning and models that are less demanding in terms of CPU and GPU. Now we are not afraid to run a deep learning model because we can maintain it, but for companies that are purely on-premises, I don't think it's an option.

AN: Deep Learning (and neural networks in general) supposedly spares us the step of feature engineering, because the model itself would select the right features on which to train. But I don't think it's a general recipe that fits all the needs. With deep learning, you are sometimes lacking explainability. Why is the model selecting this? It’s not always explainable. If you have an anomaly that you want to correct, it’s not always obvious how to do it or what has caused it. And the second point is about sustainability. When you want to be a bit more cautious in terms of processing power (think about the notion of “greener AI”), you might not want to go directly to deep learning.

AW: If deep learning gives you a 2% improvement over machine learning, but you need a cluster of machines instead of one machine, then maybe it is not worth the effort in terms of costs and sustainability.

Q: We’ve come a long way in three years. Where do you think we will be in five?

AW: Up to now we detect the problem, justify it, classify it manually, and then solve it. But that will become an automatic process: the AI will identify the problem, classify it, and also prevent it or fix it along the way. All of this will be handled by the system automatically, detecting, fixing and documenting it in the system logs, and the end user/customer will be informed about it.

AN: I'm an optimist. I'm so impressed by what can be done that it feels like the sky's the limit. But at the same time, we must abide by all the regulations about data. We have to be ethical in the way we manage data and ethical in the way we manage processing resources. These are the natural limits we should impose on ourselves.

The future of AI

So, where are we now on the Gartner AI maturity model? The phases it references are perhaps rather conceptual and high level, but taking everything across the industry into consideration we have probably progressed from a point between levels 2 and 3 in 2019 to one today where we are between levels 3 and 4. That is fairly swift progress, especially when you consider the disruptive effect the Covid pandemic had on many technology roadmaps.

Certainly at IBC we will see AI integrated into more and more aspects of the broadcast workflow. And with AI-driven deep video coding looking like it may transform the efficiency of video codecs in the future, it will steadily have a greater impact on all of our lives, both in the industry and as consumers.

To hear more about our latest features and the way that AI is helping to shape them, meet us on Stand 1.A51 at IBC2022.